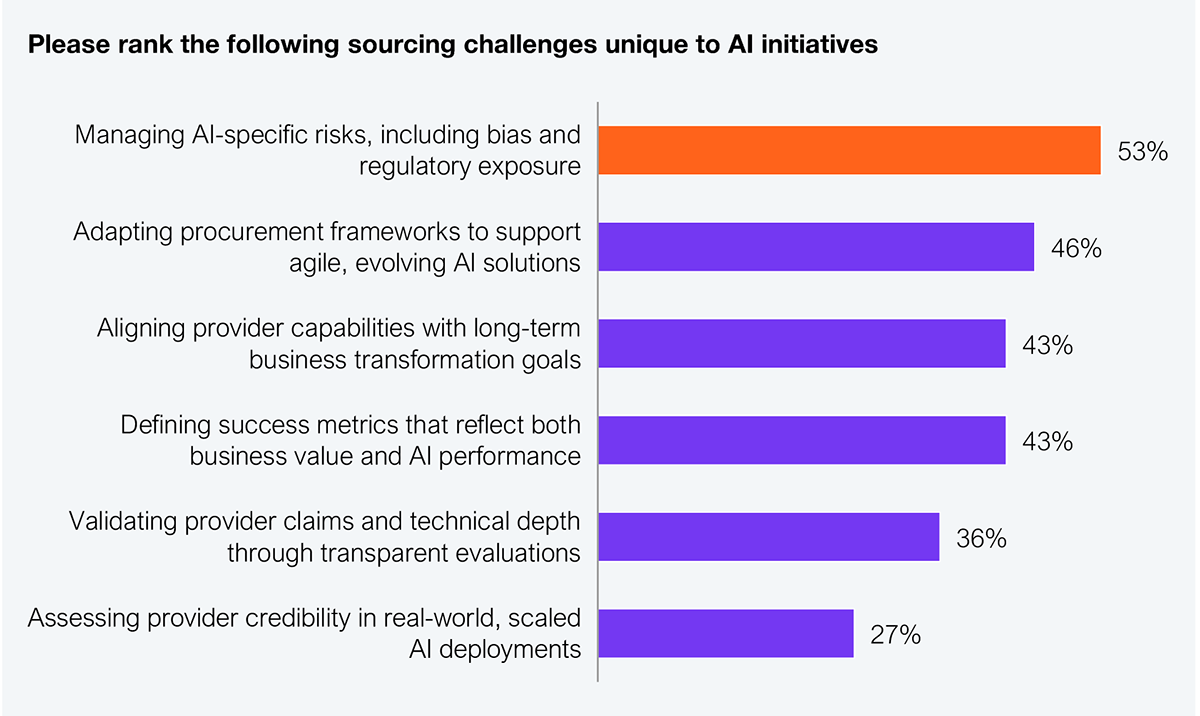

The banking and financial services (BFS) sector is at a pivotal point in AI adoption, with strong momentum already visible in automation and personalization efforts by major players such as JPMorgan Chase and Capital One. However, this enthusiasm is tempered by significant concerns around ethics, compliance, privacy, explainability, and transparency, making BFS leaders hesitant to scale AI across critical operations. A study of 101 BFS organizations revealed that 53% consider managing AI-specific risks, including bias and regulatory exposure, as the most critical sourcing challenge to resolve (see Exhibit 1).

Sample: N=101

Source: HFS Research, 2025

Without ethical safeguards, these systems become liability engines—risking discrimination, privacy violations, and catastrophic compliance failures. For BFS leaders, responsible AI must be the price of admission, not a bonus feature, when choosing a tech or services partner.

Financial institutions have legal and moral obligations to treat customers fairly and not to discriminate. However, historical financial data is riddled with bias, and without intervention, AI will scale those biases rather than eliminate them. For example, an AI-driven loan model that disadvantages certain groups is more than unfair: it can be illegal. It puts institutions in the crosshairs of regulators and the public, and the resulting outrage can quickly erode their reputation and cause real financial fallout.

Consider a cautionary tale: In 2019, the Goldman Sachs-Apple Card algorithm controversy sparked public outrage after some high-profile customers claimed it offered women significantly lower credit limits than men with similar financial profiles. Although the investigations that followed found no concrete evidence of discrimination, trust had already been shaken. Taking the lesson to heart, Goldman Sachs and Apple improved transparency and communication around the algorithm and adjusted policies to be more customer-friendly.

To prevent such pitfalls, BFS firms should seek partners that build for fairness by design, not vendors that treat ethics as an afterthought. That means training on representative datasets, running regular bias audits, and providing transparent explanations for individual decisions. If AI decisions can’t be justified, they can’t be trusted.
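A bias audit can start with something as simple as comparing approval rates across groups. The Python sketch below computes an adverse impact ratio for each group relative to a reference group and flags any group whose ratio falls below 0.8. The 0.8 cutoff is borrowed from the "four-fifths rule" in US employment-selection guidance and is an assumption here, not a legal standard for credit; the function names and sample data are hypothetical, and a real fair-lending review would add statistical testing and controls for legitimate credit factors.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}


def adverse_impact_ratios(decisions, reference_group):
    """Divide each group's approval rate by the reference group's rate."""
    rates = approval_rates(decisions)
    reference_rate = rates[reference_group]
    if reference_rate == 0:
        raise ValueError("reference group has no approvals; ratio is undefined")
    return {group: rate / reference_rate for group, rate in rates.items()}


def flag_groups(decisions, reference_group, threshold=0.8):
    """List groups whose adverse impact ratio falls below the (assumed) threshold."""
    ratios = adverse_impact_ratios(decisions, reference_group)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical audit sample: group A approved 80/100, group B approved 50/100.
    sample = [("A", i < 80) for i in range(100)] + [("B", i < 50) for i in range(100)]
    print(flag_groups(sample, reference_group="A"))  # ['B']
```

On this sample, group B is approved at 50% versus 80% for group A, a ratio of 0.625, so it is flagged for human review rather than automatically judged discriminatory.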

Compliance is another large piece of the puzzle. Banking is one of the most heavily regulated industries in the world, and those regulations increasingly extend to AI. Financial regulators have made it clear that adopting AI does not exempt banks from existing rules on fairness, consumer protection, data security, and accountability. In fact, regulators are themselves concerned about AI’s risks and are sharpening their oversight. For instance, the Monetary Authority of Singapore (MAS) has defined the FEAT principles (Fairness, Ethics, Accountability, and Transparency) for the use of AI in finance. In the US, fair lending laws such as the Equal Credit Opportunity Act prohibit discrimination in credit decisions, so lenders must be able to demonstrate that their AI credit models don’t unfairly exclude protected groups. European regulation goes further still: the GDPR gives individuals the right to meaningful information about the logic involved in automated decisions, and the EU AI Act, which entered into force in August 2024, classifies AI systems used to assess individuals’ creditworthiness or establish their credit scores as ‘high risk,’ subjecting them to strict requirements for transparency, human oversight, and documentation.

Initiatives such as Singapore’s Veritas consortium, co-led by MAS and Accenture, are developing toolkits that help financial firms operationalize fairness checks in their AI systems. By following these emerging best practices and treating ethics as seriously as accuracy, banks can work with AI solution providers to ensure their algorithms uphold fairness. This, in turn, preserves public trust, because customers and regulators will be far more comfortable with AI decisions when they know robust anti-bias measures and ethical guidelines are in place. To meet supervisory expectations, many banks are also establishing AI governance committees or extending their model risk management frameworks to cover AI models.

BFS executives demand that their tech partners, AI vendors, and integrators act as co-architects of governance, not just suppliers of code. That means providing audit trails, model documentation, adverse action explanations, and override mechanisms. BFS enterprises must shut out solution providers that don’t understand the compliance terrain.
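Audit trails and override mechanisms are easier to trust when the log itself is tamper-evident. As a minimal sketch, assuming a hypothetical `DecisionAuditLog` class and illustrative record fields, the Python below chains each decision record to the one before it with SHA-256, so any later edit to a stored record breaks verification. Human overrides are logged as new entries rather than by rewriting the original decision. This detects tampering but does not prevent it; a production system would also need protected storage, access controls, and an independent copy of the latest hash.

```python
import copy
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _chain_hash(prev_hash: str, record: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


@dataclass
class DecisionAuditLog:
    """Hypothetical append-only, hash-chained log of model decisions."""
    entries: list = field(default_factory=list)

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        stored = copy.deepcopy(record)  # later changes to the caller's dict don't leak in
        digest = _chain_hash(prev_hash, stored)
        self.entries.append({"record": stored, "prev_hash": prev_hash, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every link; return False if any record or link was altered."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != _chain_hash(prev_hash, entry["record"]):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = DecisionAuditLog()
    log.append({"application_id": "APP-001", "model_version": "credit-v3",
                "decision": "decline", "reason_codes": ["debt_to_income_high"]})
    # An override is a new entry, so the original model decision stays on record.
    log.append({"application_id": "APP-001", "event": "override",
                "new_decision": "approve", "reviewer": "analyst-17"})
    print(log.verify())  # True
    log.entries[0]["record"]["decision"] = "approve"  # simulate tampering
    print(log.verify())  # False
```

Because an override is appended rather than edited in place, reviewers can see both the model's original decision and the human decision that replaced it.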

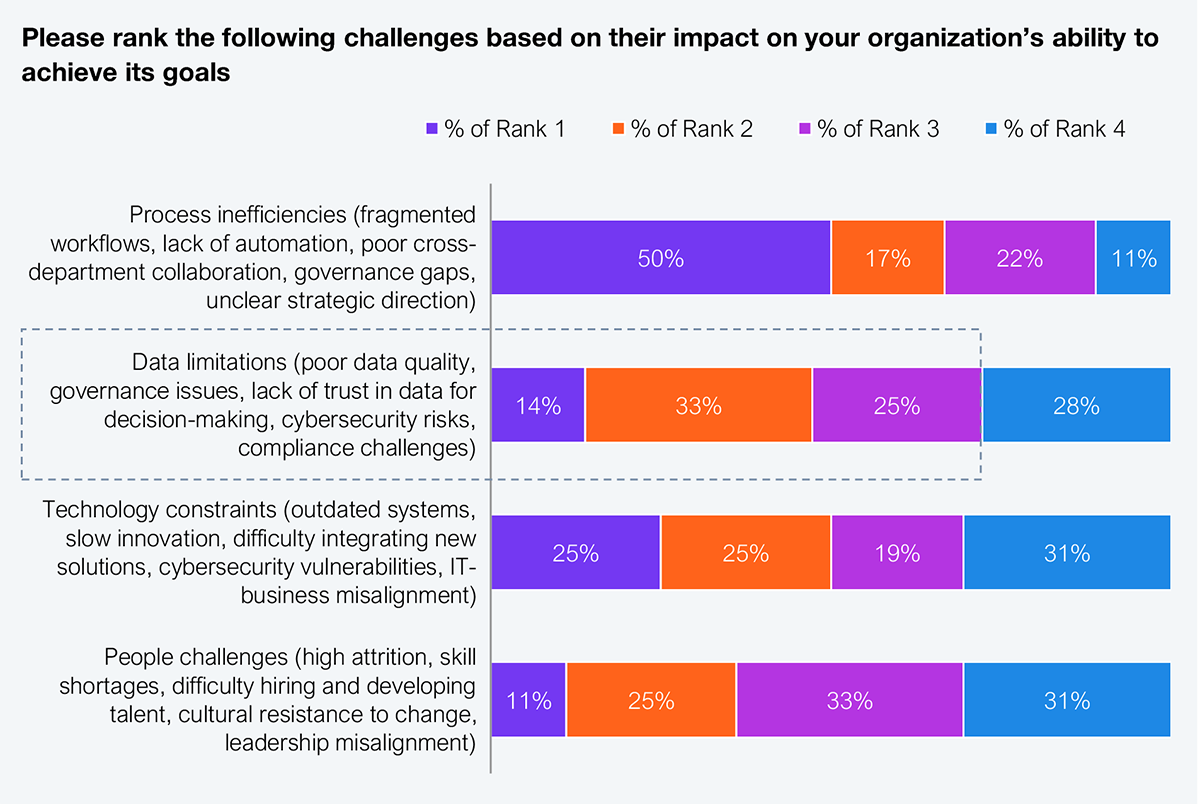

Banks safeguard vast volumes of their customers’ personal and financial data. Naturally, introducing AI raises red flags about privacy, data protection, and cybersecurity (see Exhibit 2).

Sample: N=36 BFS Global 2000 enterprise respondents actively exploring and deploying AI

Source: HFS Research, 2025

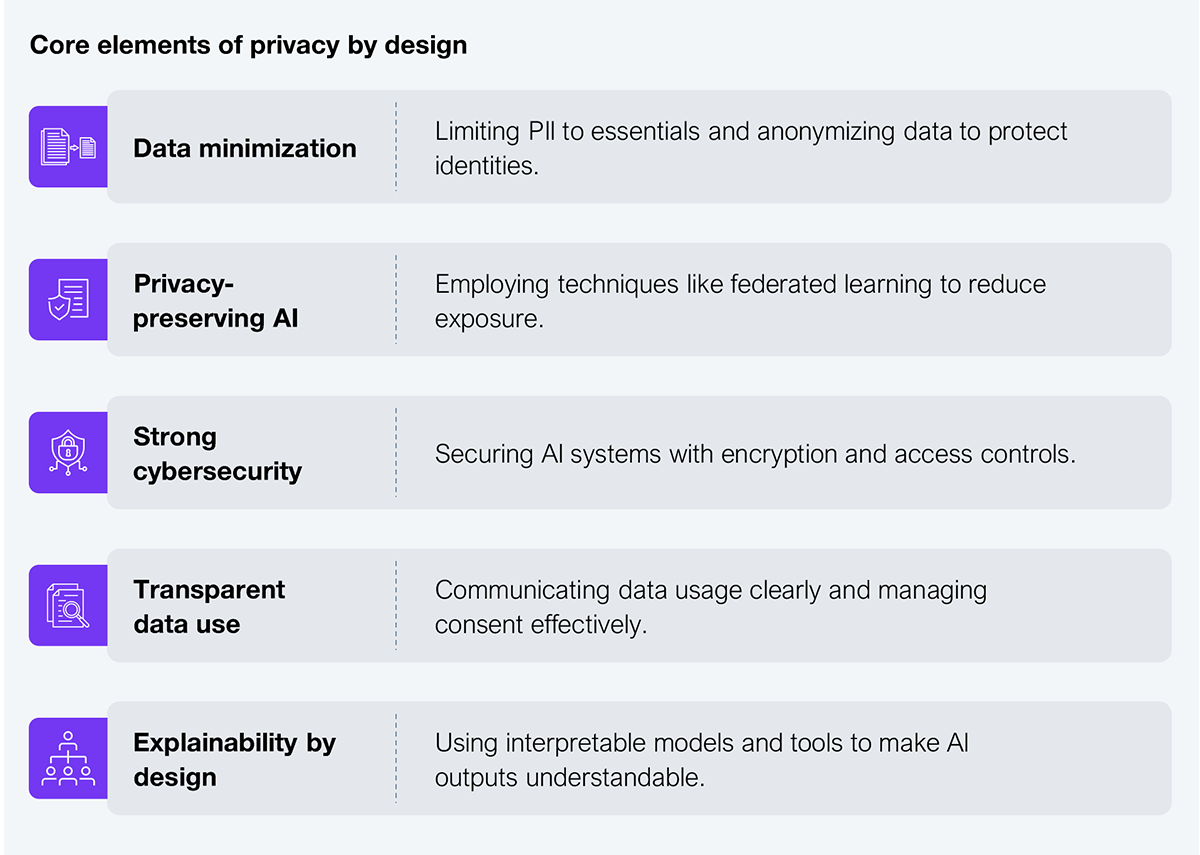

Many advanced AI algorithms thrive on big data, but feeding customer data into AI systems (especially third-party or cloud-based ones) can conflict with privacy laws or internal data-handling policies. BFS enterprises must demand that service providers and tech partners treat privacy as a core design principle (see Exhibit 3), not an afterthought. Privacy by design should prioritize minimizing the use of personally identifiable information (PII), preserving privacy, explainability, and transparency, and embedding security at the core.
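One way to limit PII exposure is to minimize each record before it reaches a model or third-party service: pass through only an allow-list of features and replace direct identifiers with keyed pseudonymous tokens. The Python sketch below does this with HMAC-SHA256 from the standard library. The field names, the allow-list, and the `minimize_record` helper are hypothetical, and in practice the secret key would be held in a key-management system. Pseudonymized data is still personal data under the GDPR, so this reduces privacy obligations rather than removing them.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only features the model is permitted to receive.
ALLOWED_FEATURES = ("income", "debt_to_income", "months_at_employer")
# Hypothetical direct identifiers that are replaced with keyed tokens.
DIRECT_IDENTIFIERS = ("customer_id",)


def pseudonymize(value, secret_key: bytes) -> str:
    """Keyed HMAC-SHA256 token: same input and key give the same token (joins still work),
    but without the key nobody can recompute tokens from known identifiers."""
    return hmac.new(secret_key, str(value).encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Keep allow-listed features, tokenize direct identifiers, drop everything else."""
    minimized = {}
    for name in DIRECT_IDENTIFIERS:
        if name in record:
            minimized[name + "_token"] = pseudonymize(record[name], secret_key)
    for name in ALLOWED_FEATURES:
        if name in record:
            minimized[name] = record[name]
    return minimized


if __name__ == "__main__":
    customer = {"customer_id": "C-1029", "name": "Jane Doe", "email": "jane@example.com",
                "income": 85000, "debt_to_income": 0.31}
    # Name and email are not allow-listed, so they never leave this function.
    print(minimize_record(customer, secret_key=b"demo-key-from-kms"))
```

An allow-list is used instead of a block-list so that any new PII field added upstream is excluded by default until someone deliberately approves it.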

Source: HFS Research, 2025

It’s worth noting that transparency and explainability are also crucial for internal buy-in within banks. Staff are more likely to adopt AI whose outputs they can understand and explain, whereas opaque outputs and unexplained decisions will meet resistance.

BFS leaders are no longer asking ‘if’ they should adopt AI. They’re asking ‘how’ to adopt it without losing trust, control, and regulatory standing.

Enterprise AI in BFS will not scale without robust guardrails. Ethics, compliance, privacy, transparency, and explainability should not be seen as constraints; they are the foundations on which BFS AI solutions must be built.

Demand more from your tech partners. Ask for scalable and secure responsible AI proof points, not just demos. Refuse the black box and make trust the competitive differentiator.
