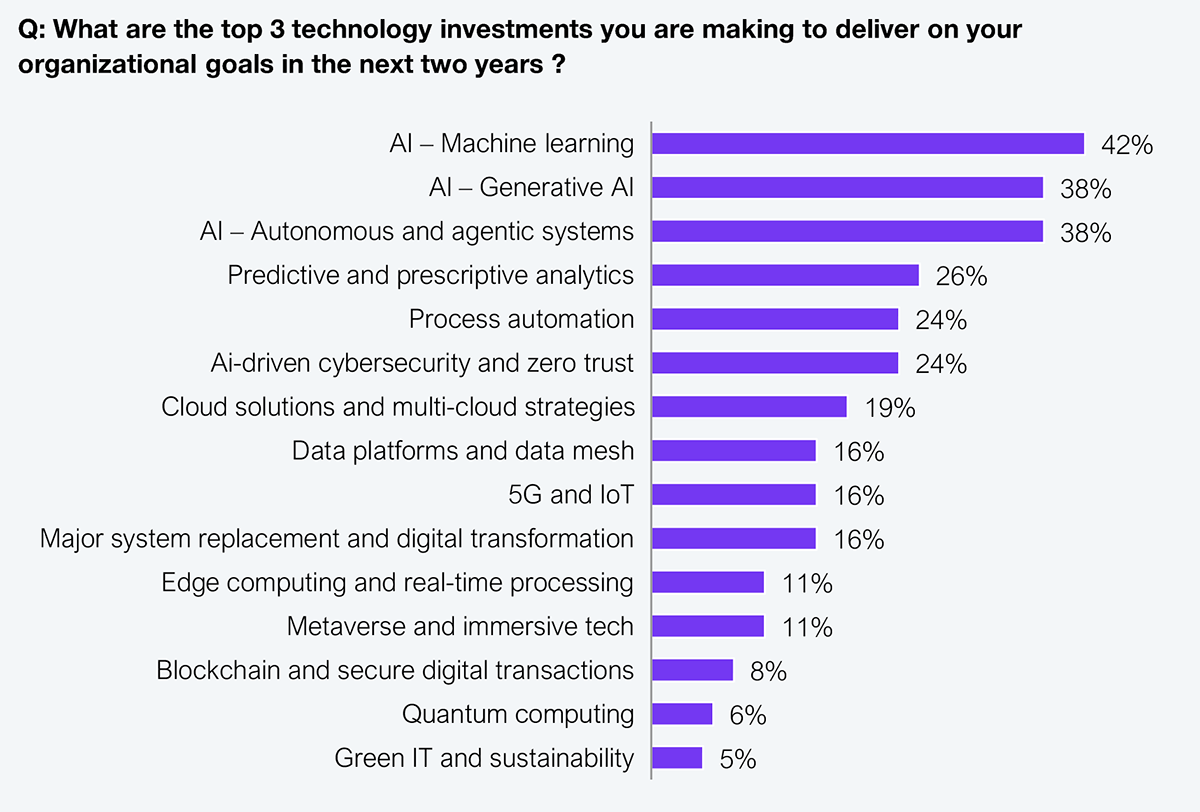

Agentic AI is quietly being embedded into enterprise tech stacks, but most security leaders still govern it like basic automation. In a recent enterprise tech survey, 38% of respondents reported investing in autonomous and agentic AI systems. Another 24% highlighted AI-driven cybersecurity and zero trust as a strategic focus (see Exhibit 1). This suggests that agentic capabilities are entering enterprise security stacks faster than governance models are evolving.

Sample: n = 305 Global 2000 decision makers

Source: HFS Research, 2025

This isn’t just a semantic gap; it’s a live risk. The moment AI systems begin to decide and act instead of merely assisting, you’ve crossed into a zone that your current oversight model likely can’t handle.

This point of view exposes the creeping mismatch between AI capability and enterprise governance. It draws a sharp line between traditional AI agents and agentic AI and shows why failing to recognize that line puts cybersecurity, compliance, and operations at risk.

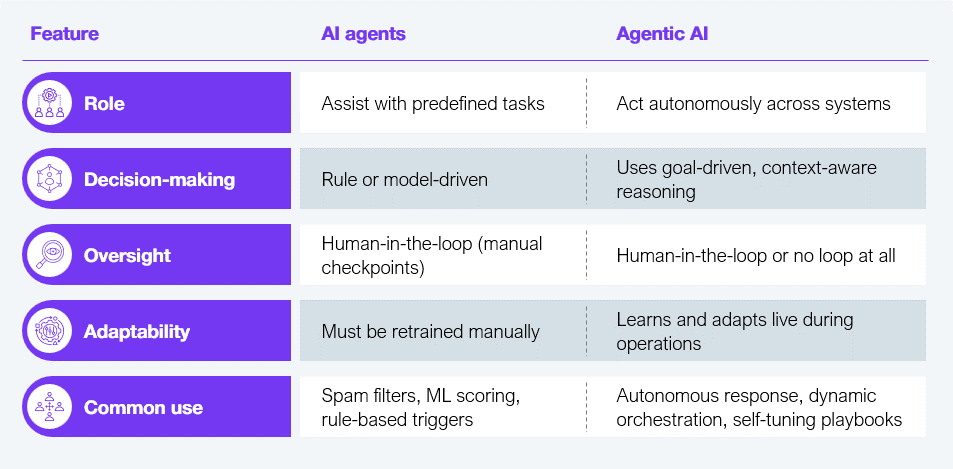

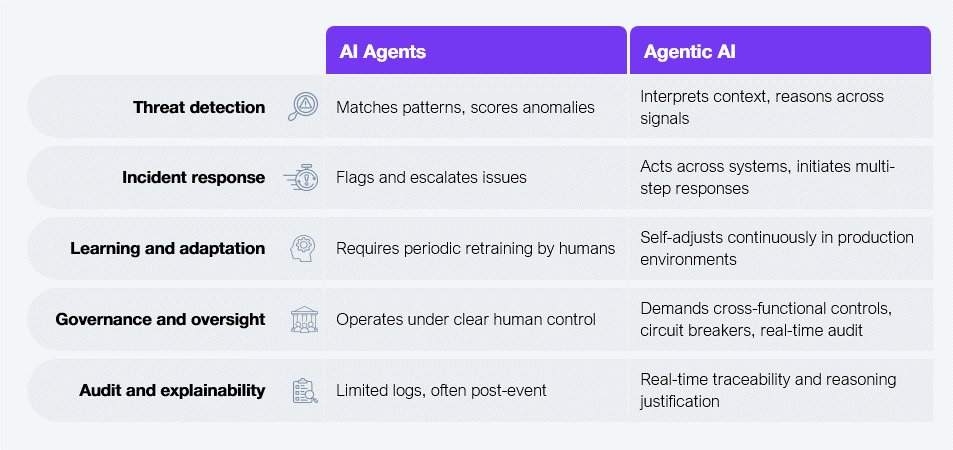

Don’t mistake task-driven assistants for autonomous decision-makers. That confusion is already exposing enterprises to invisible threats and unchecked automation. Before we unpack the governance crisis, look at Exhibit 2 to see what separates yesterday’s AI agents from today’s emerging agentic AI.

Source: HFS Research, 2025

Cyber threats are no longer static; they evolve, adapt, and increasingly use AI to exploit enterprise blind spots. In response, many security teams have upgraded from rule-based tools to AI-powered agents that assist with detection and scoring.

But these agents, while more sophisticated, remain fundamentally reactive. They act on trained patterns and predefined thresholds. They don’t reason. They don’t adapt in the moment. And they don’t make decisions beyond their scope.

That’s where the distinction becomes critical. Agentic AI doesn’t just assist; it initiates, decides, and acts independently. It analyzes context, learns from live data, and orchestrates multi-step responses. These are not enhancements to automation; they are shifts in accountability.

The implication for enterprise security is profound: If autonomous AI is deployed under oversight structures meant for tools that require human validation, then key actions may go unmonitored, unjustified, or unauditable.

The distinction between agent and agentic AI isn’t just semantic. It determines whether the governance model is aligned with the actual risk exposure.

Most enterprises assume they deploy narrow AI systems that assist, alert, and stop short of action. But that assumption is increasingly outdated.

Many tools now marketed as ‘AI-powered’ are quietly evolving, gaining the ability to decide, adapt, and initiate responses independently. This is not theoretical. It is already happening inside platforms that enterprises continue to treat as automated assistants.

Take your extended detection and response (XDR) or security orchestration, automation, and response (SOAR) system, for example. If it can correlate alerts, initiate containment, or revise its playbooks based on real-time feedback, then this is no longer just advanced automation. This is agentic behavior. And, if your governance model does not reflect that shift, critical decisions may occur without the awareness of legal, compliance, or audit stakeholders.
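The test described above can be sketched as a simple inventory check. This is a minimal illustration, not a feature of any XDR or SOAR product: the `ToolProfile` record and its field names are hypothetical, and the three signals are taken directly from the paragraph above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolProfile:
    """Hypothetical inventory record for a security tool (illustrative fields)."""
    name: str
    correlates_alerts: bool = False
    initiates_containment: bool = False
    revises_playbooks: bool = False


def agentic_behaviors(tool: ToolProfile) -> list[str]:
    """List which of the three agentic signals from the text the tool exhibits."""
    signals = {
        "correlates alerts": tool.correlates_alerts,
        "initiates containment": tool.initiates_containment,
        "revises playbooks from real-time feedback": tool.revises_playbooks,
    }
    return [label for label, present in signals.items() if present]


def needs_governance_review(tool: ToolProfile) -> bool:
    """Any single signal flags the tool, mirroring the 'or' in the text."""
    return bool(agentic_behaviors(tool))
```

A team might run this across its tool inventory and route every flagged tool to legal, compliance, and audit stakeholders for a governance review.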

This is where risk enters silently: agentic functions are emerging inside systems that are still governed like static tools. These systems may not be fully autonomous yet, but they are evolving faster than the oversight frameworks meant to contain them. And that is the exposure: real-time decisions made without clear lines of accountability.

Misclassifying AI capabilities is not just a technical oversight. It is a governance breakdown that creates invisible risks across cybersecurity, compliance, and operations.

Both errors create exposure. The result is a security model that overestimates its intelligence and underestimates its autonomy. This is where breaches happen, controls fail, and compliance gaps widen, not because the AI failed but because governance never caught up to what the AI had become.

Agentic AI offers a clear appeal to lean cybersecurity teams. These systems can offload complex decision-making, fill gaps in threat response, and scale faster than human teams ever could.

However, autonomy is not a shortcut to maturity. It requires oversight, auditability, and cross-functional coordination, which many organizations lack. Real-time explainability, legal compliance, and rollback mechanisms are essential for responsible deployment.

This creates a strategic paradox. Agentic AI can enhance capabilities, but without proper governance, it risks amplifying chaos. Smaller teams should focus on low-risk use cases first, implement safeguards early, and gradually increase autonomy as oversight improves.

The goal is not to slow AI adoption but to align it with operational readiness. Otherwise, agentic systems designed to protect may unintentionally introduce unmanaged risk.

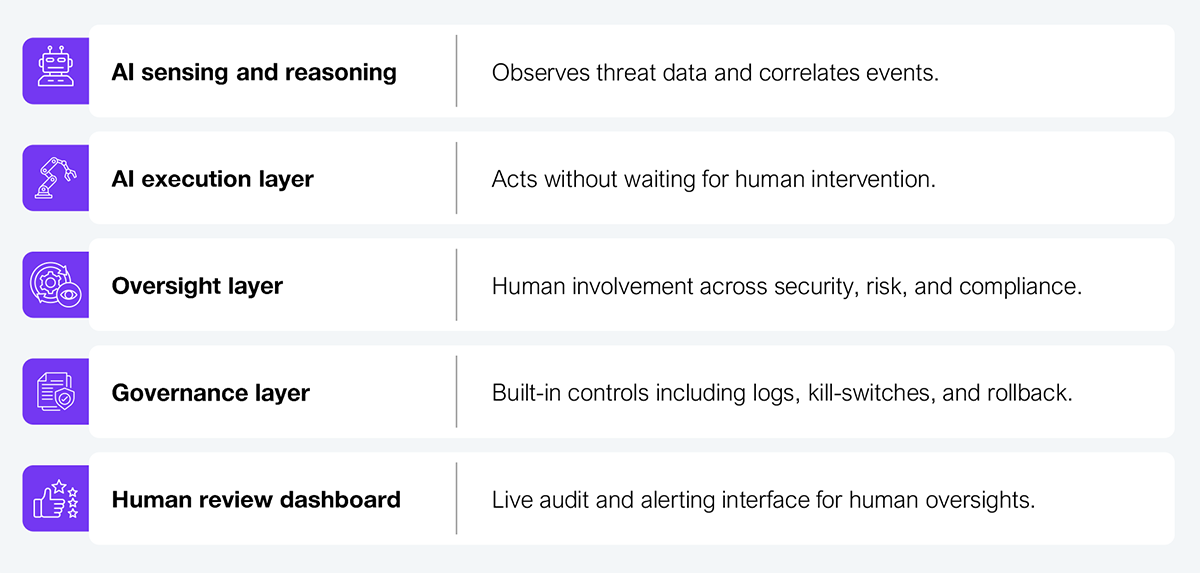

As AI systems become more autonomous, the governance structures around them must evolve, not incrementally but structurally. Managing agentic AI with controls designed for assistive AI agents is inefficient and risky.

Agentic systems operate across layers, make contextual decisions, and act independently. That requires real-time oversight, circuit breakers, explainability by design, and shared accountability between cybersecurity, compliance, risk, and legal.
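One way to picture a circuit breaker for autonomous actions is a rate cap with an audit trail. The sketch below is an assumption-laden illustration rather than a reference design: the class name, the per-window cap, and the log fields are all hypothetical. It auto-approves actions up to a limit within a time window, escalates anything beyond that to a human, and records a rationale for every request so decisions stay explainable after the fact.

```python
import time
from collections import deque
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ActionCircuitBreaker:
    """Caps autonomous actions per time window; beyond the cap, a human must approve."""
    max_actions: int
    window_seconds: float
    clock: Callable[[], float] = time.monotonic  # injectable for testing
    audit_log: list = field(default_factory=list)
    _recent: deque = field(default_factory=deque, init=False)

    def request(self, action: str, rationale: str) -> str:
        now = self.clock()
        # Drop timestamps that have aged out of the window.
        while self._recent and now - self._recent[0] >= self.window_seconds:
            self._recent.popleft()
        if len(self._recent) < self.max_actions:
            self._recent.append(now)
            decision = "auto_approved"
        else:
            decision = "needs_human_approval"  # breaker is open
        # Every request is logged with its rationale, approved or not.
        self.audit_log.append(
            {"time": now, "action": action, "rationale": rationale, "decision": decision}
        )
        return decision
```

For example, `ActionCircuitBreaker(max_actions=2, window_seconds=60)` would let an agent isolate two hosts in a minute on its own and hand the third request to an analyst. The thresholds are a policy choice that cybersecurity, compliance, risk, and legal would need to set together.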

Exhibit 3 illustrates how agentic AI reshapes cybersecurity operations. It shows a system that acts without waiting for human input yet remains governable through layered, pre-designed oversight mechanisms.

Source: HFS Research, 2025

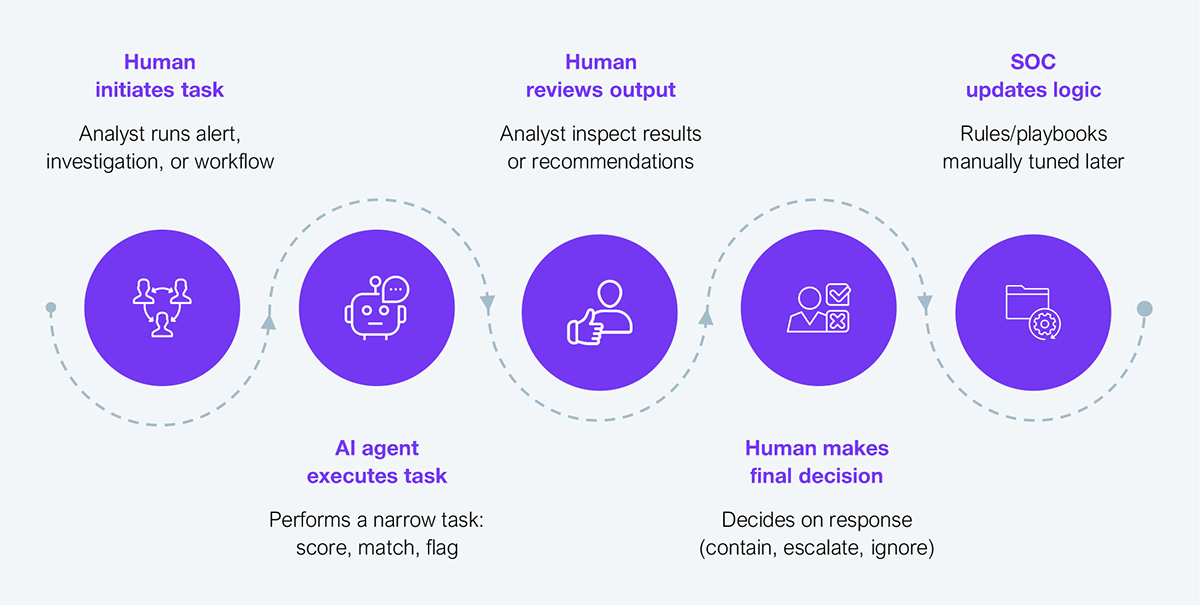

Exhibit 4 illustrates that most enterprises still use traditional AI agents that support but don’t replace humans, with governance being manual, retrospective, and centralized in the SOC.

Source: HFS Research, 2025

These two models are not maturity stages. They represent a structural shift. Treating one like the other is a design flaw, not a delay. If enterprises do not intentionally adapt their operating models, they will delegate decision-making without knowing who is truly in control.

AI’s role in cybersecurity isn’t monolithic. To understand how AI agents and agentic AI differ in real enterprise environments, it helps to map them across core cyber functions (see Exhibit 5).

Source: HFS Research, 2025

The shift from agents to agentic systems touches every layer of cybersecurity, from detection to decision and oversight to accountability. Failing to distinguish between them doesn’t just slow innovation; it creates governance blind spots at the exact moment adversaries are accelerating.

The real risk isn’t rogue AI. It’s rogue assumptions about what kind of AI you’ve already deployed.

Enterprises should govern AI based on behavior and consequences, not labels. This involves classifying systems by their actions, matching oversight to their autonomy, and ensuring explainability, rollback, and accountability in every deployment.
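The three steps in the paragraph above can be sketched as a small readiness check. Everything here is illustrative: the autonomy tiers, the control names, and the extra controls assigned to each tier are assumptions, not an HFS framework. The only rule taken from the text is that explainability, rollback, and accountability apply to every deployment.

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    ASSISTS = 1      # alerts or scores; a human takes every action
    RECOMMENDS = 2   # proposes actions; a human approves them
    ACTS = 3         # decides and acts without waiting for a human


# Required for every deployment, per the text.
BASELINE = frozenset({"explainability", "rollback", "accountable_owner"})

# Assumed extra controls per tier (hypothetical policy, adjust to your own).
EXTRA = {
    Autonomy.ASSISTS: frozenset(),
    Autonomy.RECOMMENDS: frozenset({"human_approval"}),
    Autonomy.ACTS: frozenset({"real_time_oversight", "circuit_breaker"}),
}


@dataclass(frozen=True)
class Deployment:
    name: str
    autonomy: Autonomy
    controls: frozenset


def classify(proposes_actions: bool, executes_actions: bool) -> Autonomy:
    """Classify by observed behavior, not by the vendor's label."""
    if executes_actions:
        return Autonomy.ACTS
    if proposes_actions:
        return Autonomy.RECOMMENDS
    return Autonomy.ASSISTS


def missing_controls(deployment: Deployment) -> list[str]:
    """Return the controls the deployment still lacks for its autonomy tier."""
    required = BASELINE | EXTRA[deployment.autonomy]
    return sorted(required - deployment.controls)
```

A deployment that executes actions but has only explainability and rollback in place would come back with three gaps: an accountable owner, a circuit breaker, and real-time oversight.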

This is not about slowing AI down. It’s about catching up before it outruns your control. The time to build agentic governance isn’t after a breach; it’s now.
