Traditional infrastructure is breaking under the weight of AI. GPU shortages, volatile cloud economics, compliance chaos, and fractured data governance mean CIOs must now control the system, not just keep the lights on.

Infrastructure today is the execution control point for where, how, and whether AI runs. It's the only layer that orchestrates placement (cloud, edge, on-prem), economics (FinOps and GreenOps), risk (security, sovereignty, resilience), and runtime behavior (performance, scaling, recovery). In an AI-driven enterprise, it is effectively the operating system. Cognizant's new AI Factory shows how quickly infrastructure is being repositioned as this control plane.

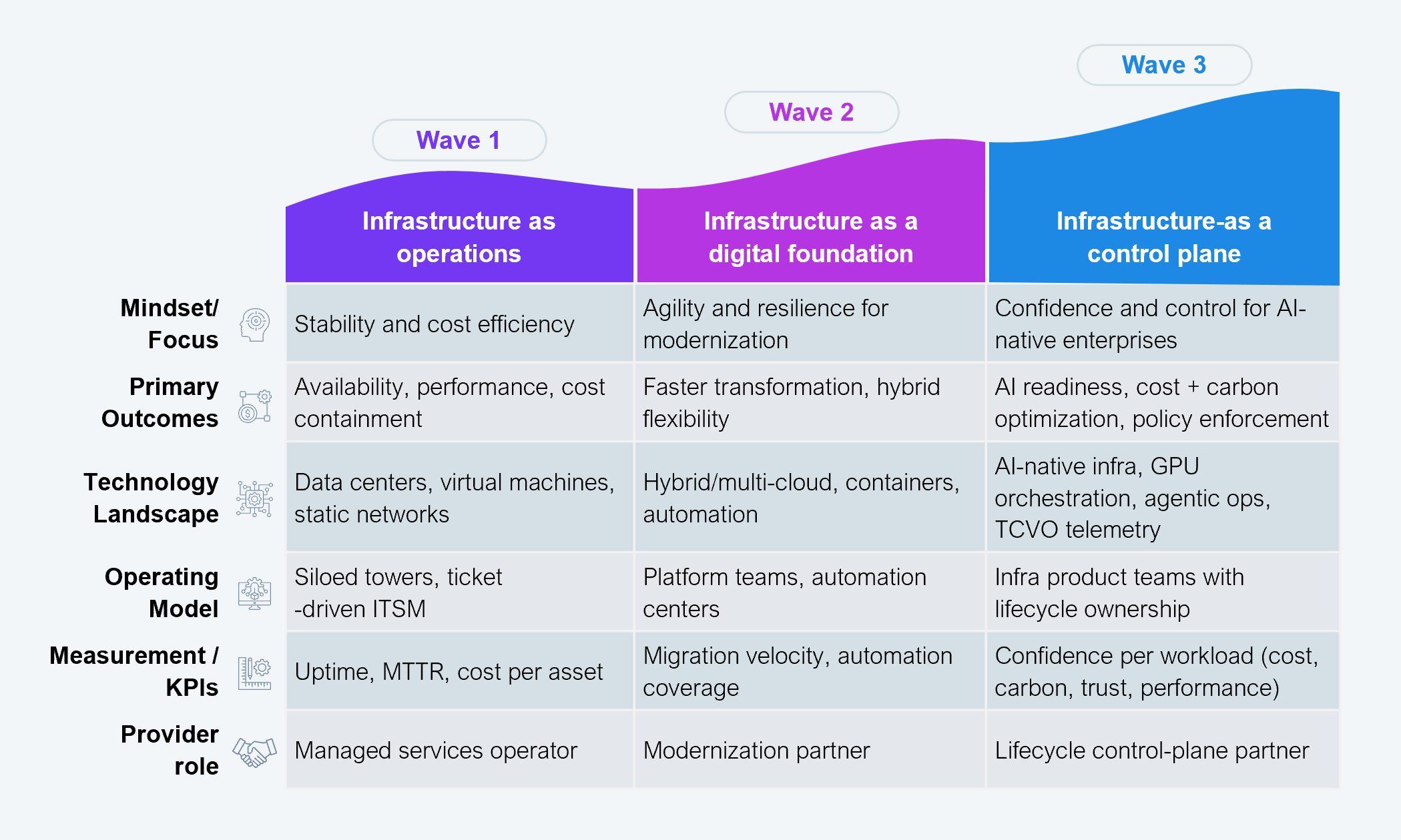

For infrastructure to function as an AI control plane, it must evolve beyond operations into orchestration. This shift is visible in how infrastructure services have progressed across three distinct waves:

Source: HFS Research, 2026

As illustrated, Wave 3 marks the shift to runtime governance. AI workloads expose the limits of static infrastructure: GPU-intensive models require intelligent scheduling, sovereign data needs enforceable locality, cost must be optimized dynamically, and resilience must operate at the business-service level, not just at the level of individual infrastructure components. Control is also shifting from tickets and thresholds to telemetry-driven orchestration. CIOs who cling to legacy operations models will bottleneck the very intelligence their businesses need to scale.

This orchestration-centric model allows infrastructure to act as a genuine control plane for AI, continuously governing placement, cost, risk, and trust as workloads execute. Service providers are responding by repositioning infrastructure as an enterprise governance system, not just an execution engine. Cognizant's AI Factory is designed to operationalize exactly this model.

Cognizant’s AI Factory is a direct bet on this control plane model. It treats infrastructure as the layer that governs how AI workloads are placed, secured, optimized, and trusted in production.

These capabilities turn infrastructure from a reactive run function into a proactive control system. The design enables runtime visibility, dynamic resource allocation, and compliance enforcement at scale, but enterprise leaders must ensure that such platforms don't become passive dashboards. The value lies in how consistently they enforce policy, optimize spend, and govern trust in production, not in how elegantly they visualize it.

The enterprise takeaway is clear: it’s no longer enough to expect providers to modernize environments or automate processes. CIOs must demand continuous, policy-led governance of AI workloads at scale. If the infrastructure doesn’t deliver confidence per workload (on cost, carbon, trust, and performance), it’s not ready for the AI-native future.

The future of infrastructure is not defined by faster migrations or automated tickets, but by whether it can govern AI execution with confidence at scale. It’s about establishing a control plane that continuously governs intelligence, risk, cost, and trust across the enterprise.

Cognizant’s next-gen infrastructure direction shows how providers are beginning to shift from operating environments to governing execution at scale. For CIOs, the implication is clear: infrastructure strategy is no longer an execution problem, but a control problem. Those who act now will be best positioned to scale AI with confidence, manage complexity without fragmentation, and turn infrastructure from a cost center into a sustained business enabler.
