Compliance executives waiting on AI legislation to tell them how to behave are already falling behind customer and market needs. Stop overcomplicating Responsible AI. Build it to meet customer compliance from day one, and you’ll own the trust, lead the market, and help write the rules everyone else will have to follow.

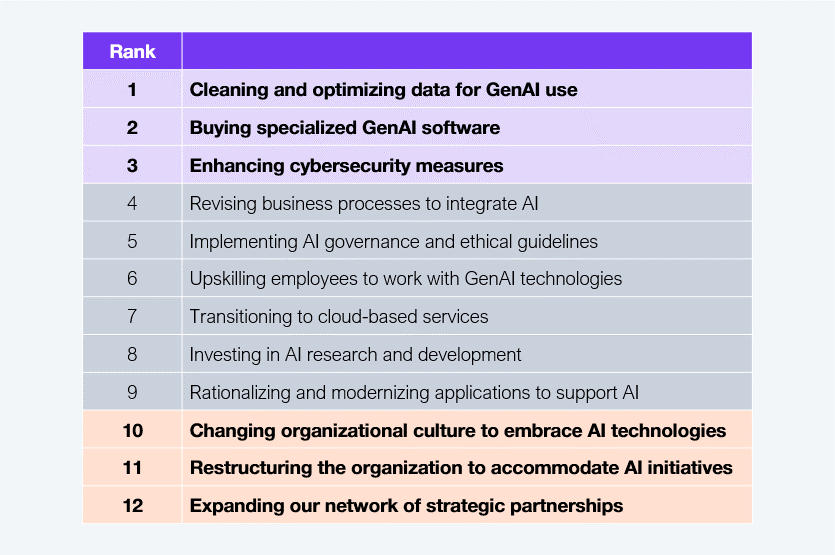

The opportunity to lead suggests most companies have their priorities wrong. AI governance and ethical guidelines remain an also-ran among AI priorities for executives across the G2000 (see Exhibit 1). That has to change.

Sample: 553 executives across Global 2000 enterprises

Source: HFS Research in partnership with Infosys, 2025

Legislation is bubbling up across the globe. It includes recent EU demands to share what training data has been used in the AI systems you deploy, 2027 California state rules retrofitting similar demands to anything deployed since 2022, and China’s edict to open your AI books to state regulators.

But the leader who waits on the law to tell them what to do is already missing out on the benefits of proactively meeting customer needs. Taking your ‘Responsible AI’ seriously means getting ahead rather than waiting to meet the slow-moving demands of lawmakers as those requirements emerge slug-like from committee room to courtroom.

We must face facts: the capabilities and availability of AI are moving faster than our outmoded legislative processes. Your customers are experiencing the impact of AI every day, using it, and worrying about the consequences, just as you should be.

So build your Responsible AI to comply with live customer concerns and needs, adapting as those change. Waiting on the law is a drag on keeping pace with the market.

Yes, of course, as a compliance leader, you have to stay on the right side of the law. We aren’t suggesting you dodge those responsibilities. Get across them, but treat them as your baseline. We advocate getting ahead of the legal processes. The right side of the law, in this case, is ahead of it.

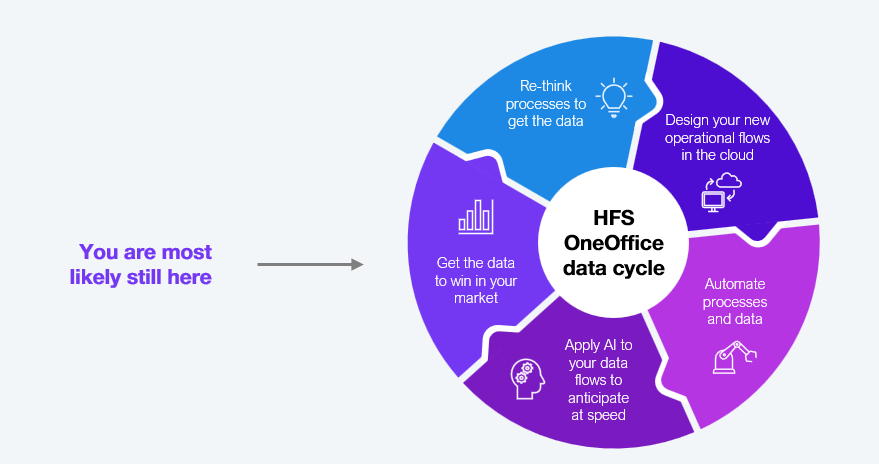

The HFS Data Cycle offers a first-principles framework to help you get the data you need to get close to your customers, understand their needs and attitudes, and then respond to them in your use of AI. By repeating the cycle (see Exhibit 2), you keep pace with changes in customer concerns regarding bias, copyright, and data privacy, and with customer acceptance, or rejection, of the ethical boundaries you set. Integrate this into your customer data cycle to make customer compliance as central as meeting customer demand.

The data cycle aligns with the principles of matching what you do to what your customer needs.

Source: HFS Research, 2025

Applying the HFS Data Cycle approach to Responsible AI makes you a proactive leader while others sit on their hands. It keeps you up to the minute with customer responses to changes in AI, enabling you to serve them better, set the pace for the market, and give lawmakers a clear steer on what actually works as they consider future legal updates.

But this is not your cue to hand over all responsibility to your customers. You must choose your ethical boundaries and act accordingly, as we identified in the HFS blog post: Only humans can make AI ‘ethical’. Machines make it transparent and accurate.

The pace of legislation is woefully disconnected from the pace of development in AI and from your customers’ reaction to AI capabilities and behaviors. Waiting is for fools. Move now or watch from the sidelines as more customer-compliant competitors grab market share by anticipating change through every customer interaction and updating processes to respond to how the data informs them.
