Insurers can’t afford to sit out the agentic shift. Inaction locks them into human-centric operating models that are both inefficient and expensive, especially as carriers contend with sprawling systems, rising cost per policy, tightening capital rules, and relentless compliance demands. Add a shrinking talent pool and mounting regulatory pressure, and legacy models are starting to crack.

Agentic AI gives insurers a fresh opportunity to redesign insurance operations by placing digital labor at the core. However, this only works when agents are built with real operational context. Goals, accountability, decision rights, and domain judgment shape how work gets done and how outcomes are owned within a carrier. Sutherland’s AI Hub aims to address this by blending role-based operating logic with AI agents to redesign and modernize insurance operations.

Most insurers treat agents as a technology experiment rather than as part of a new operating model. These agents are built to handle repetitive tasks such as navigating systems, responding to queries, and interacting with customers, but they are not designed to solve real business problems. If an agent can complete a task but not own the outcome, it isn’t agentic; it is traditional automation in a new bottle. The result is a proof-of-concept graveyard that fails to move the needle on loss ratios, policy costs, or customer experience.

The missing ingredient is the context human employees work with: a defined purpose, goals, and boundaries that help them plan, make informed decisions, and execute tasks effectively. Unless insurers build that context into agents, humans will remain the organization’s real control layer. Embedding that context is a significant challenge, which is where Sutherland’s AI Hub comes in.

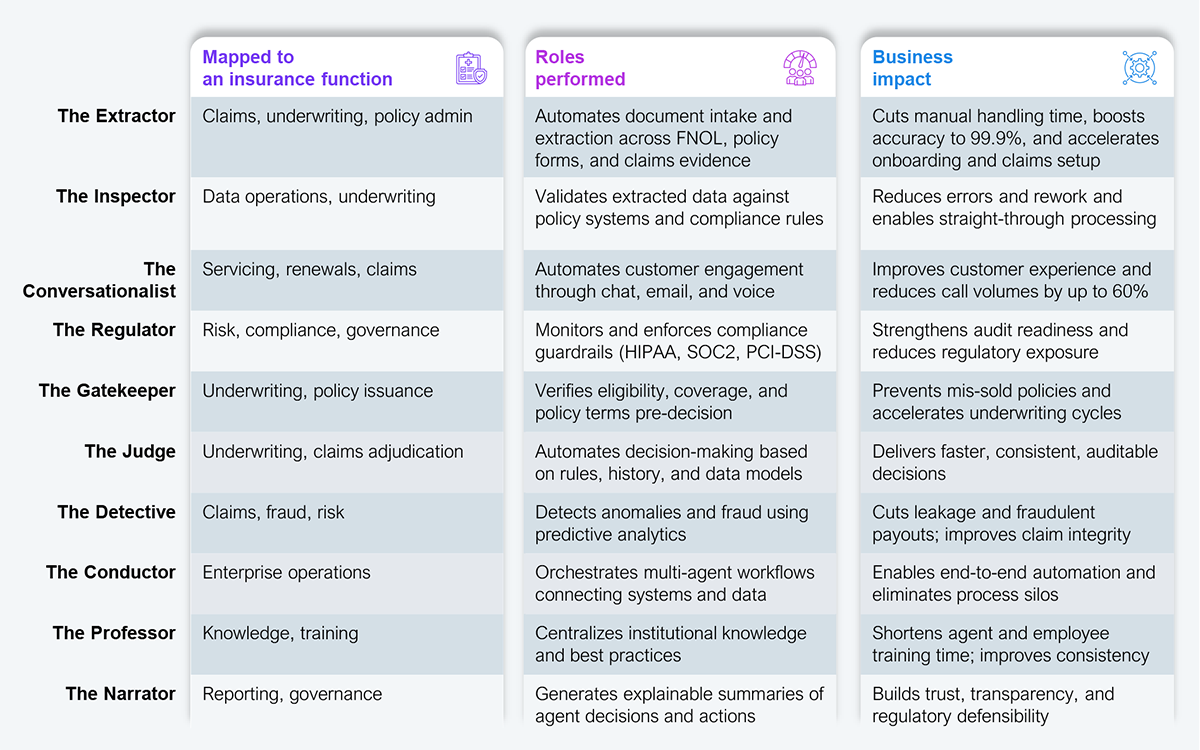

Sutherland recognized that most agents stumble because they’re not trained on the realities of human-based roles. Its AI Hub, launched in 2025 as the culmination of more than a decade of product and technology innovation, aims to change that by using Sutherland’s domain expertise to codify best practices into role-based agents that mirror how work actually happens within a carrier. Each persona is mapped to a real operational job (see Exhibit 1).

Source: Sutherland, 2026

These roles aren’t isolated bots chasing ROI metrics. They operate as a stitched-together virtual team with real role-level context, carrying a goal from intake to outcome. It’s not AI imitating people, but AI working the way people do, and alongside them, in a collaborative, contextual, and purposeful way. For insurers, this will translate into faster cycle times and reduced cost per policy, among other benefits.

Role-based operating context alone isn’t enough. Ultimately, it’s the technology underneath that makes agents work. Insurers need a governed tech stack to avoid what we call “agentic slop”: poorly connected agents with unclear authority, limited observability, and a lack of trust. To eliminate that slop, Sutherland has integrated three layers (explained below) into the AI Hub, bringing accountability, clarity, and intelligence to every agent.

The caveat is that most insurers are still working through their foundational challenges. Core system fragmentation, inconsistent data pipelines, and unclear process ownership remain widespread. Agentic AI doesn’t bypass these weaknesses but exposes them. When underlying systems, data flows, operating controls, and context are poorly defined, multi-platform, multi-partner agent environments quickly become heavy and brittle. Fixing the foundation isn’t a box already checked, but a parallel journey of deliberate context engineering. This is where the hardest work and the highest value creation still sit.

Sutherland’s role-based agents are already in action. A US-based insurer, for instance, is using them to manage daily benefits enrollment cases that require data sufficiency and data accuracy checks. Another carrier operating a scaled multi-agent setup has five agents working alongside a supervisor agent that sets priorities and orchestrates the order of task execution within defined guardrails.

Such implementations illustrate how you can build a role-based agent workforce:

Redesigning the operating model with digital labor at the core is imperative for insurers that want to stay competitive. Those that treat agents as real members of their workforce have a real chance to redesign their operating models for the AI era. Those that don’t will just add more layers of human and technology complexity. Ultimately, success hinges on choosing the right partner, one with deep domain expertise in giving agents real human context.
