In early 2025, HFS Research published “Why, what, and how financial services firms can be AI-First,” challenging a banking and financial services (BFS) industry narrative in which “AI-first” was widely claimed but, in practice, largely limited to pilots. Since then, the focus has shifted from whether to adopt AI to where to scale it for meaningful impact beyond productivity gains.

Against this backdrop, HFS Research and Infosys recently convened a closed-door banking roundtable (Exhibit 1) to explore the friction that emerges when AI meets regulated, risk-averse environments. As banks move beyond “a thousand proofs of concept” toward selective scaling and early agentic deployments, progress remains constrained by a hard truth: AI cannot guarantee deterministic accuracy in a sector that demands it. This report distills that tension between banking’s need for deterministic outcomes and AI’s probabilistic nature, along with other critical challenges, into a set of practical AI reality checks. The goal is to help banking leaders learn from peers’ successes, failures, and course corrections, a value one participant stressed directly.

There is risk in going too fast, there is definite risk in going slow, and there is death in not moving. It’s important for all of us to share information, share success stories, and learn from each other.

— Vikram Nafde, CIO, Webster Bank

Source: HFS Research in partnership with Infosys, 2026.

Banking operates on a deterministic spine, where auditability, repeatability, and accuracy are nonnegotiable. By contrast, AI systems are probabilistic. Instead of following fixed rules, they learn statistical patterns from large volumes of data and generate outputs based on what is most likely to be correct. This enables AI to produce useful results even when information is incomplete, but it can also lead to variability in outcomes.
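The contrast above can be made concrete with a small sketch. It is illustrative only: the interest rule, the candidate answers, and their probabilities are hypothetical stand-ins, not figures from the roundtable or the behavior of any specific model.

```python
import random
from decimal import Decimal, ROUND_HALF_UP


def simple_interest(principal: Decimal, annual_rate: Decimal, years: int) -> Decimal:
    """Deterministic rule: identical inputs always produce the identical output."""
    interest = principal * annual_rate * years
    return interest.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Hypothetical distribution over answers a probabilistic system might give
# to the same question. The weights are assumptions made for illustration.
CANDIDATES = {
    Decimal("150.00"): 0.90,  # the correct simple-interest figure
    Decimal("157.63"): 0.07,  # plausible slip: compounding applied by mistake
    Decimal("15.00"): 0.03,   # plausible slip: misplaced decimal
}


def sample_answer(rng: random.Random) -> Decimal:
    """Probabilistic output: draw one answer according to its likelihood."""
    answers = list(CANDIDATES)
    weights = list(CANDIDATES.values())
    return rng.choices(answers, weights=weights, k=1)[0]


# Ask each "system" the same question 1,000 times.
rule_outputs = {
    simple_interest(Decimal("1000"), Decimal("0.05"), 3) for _ in range(1000)
}
rng = random.Random(0)  # fixed seed so the sketch is reproducible
model_outputs = [sample_answer(rng) for _ in range(1000)]

print("distinct rule outputs:", len(rule_outputs))
print("distinct sampled outputs:", len(set(model_outputs)))
```

The rule returns one value every time, while the sampled system is usually right but occasionally returns a different figure. That occasional variability is the property that clashes with auditability and repeatability requirements.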

As AI moves into higher-risk and more complex tasks in BFS, probabilistic systems collide with these requirements. Until AI can consistently demonstrate factual reliability and defensible confidence, banks will not allow it to operate autonomously. The cost of failure is simply too high.

Roundtable participants noted that even advanced models stumble with multi-step calculations that are routine in banking. Humans, by contrast, are trusted because they are identifiable, traceable, and accountable, with reliability proven over time.

The HFS Take: This is not AI versus people; it’s people with AI outperforming those without it. AI will increasingly inform and accelerate decisions, but human accountability will remain in the final control layer.
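One way to picture a human final control layer is sketched below. This is a minimal illustration under stated assumptions, not a design described by any roundtable participant: the `Recommendation` and `Decision` types, the `confidence` score, and the 0.95 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Recommendation:
    case_id: str
    action: str
    confidence: float  # hypothetical model-reported score in [0, 1]


@dataclass(frozen=True)
class Decision:
    case_id: str
    action: str
    decided_by: str    # always a named, accountable human
    ai_suggested: str  # retained so the record stays traceable
    overridden: bool


def needs_detailed_review(rec: Recommendation, threshold: float = 0.95) -> bool:
    """Flag low-confidence recommendations for closer human scrutiny.

    The threshold is an assumed value; it only prioritizes review and
    never lets the AI act on its own.
    """
    return rec.confidence < threshold


def finalize(
    rec: Recommendation, reviewer: str, reviewer_action: Optional[str] = None
) -> Decision:
    """The AI informs the decision; a named human makes and owns it."""
    if not reviewer:
        raise ValueError("a named human reviewer is required")
    action = reviewer_action if reviewer_action is not None else rec.action
    return Decision(
        case_id=rec.case_id,
        action=action,
        decided_by=reviewer,
        ai_suggested=rec.action,
        overridden=action != rec.action,
    )


rec = Recommendation(case_id="case-001", action="approve", confidence=0.81)
decision = finalize(rec, reviewer="analyst.jane", reviewer_action="escalate")
print(needs_detailed_review(rec), decision)
```

Every decision record names the human who made it and keeps the AI suggestion alongside, so the outcome stays identifiable, traceable, and accountable even when the reviewer simply accepts the recommendation.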

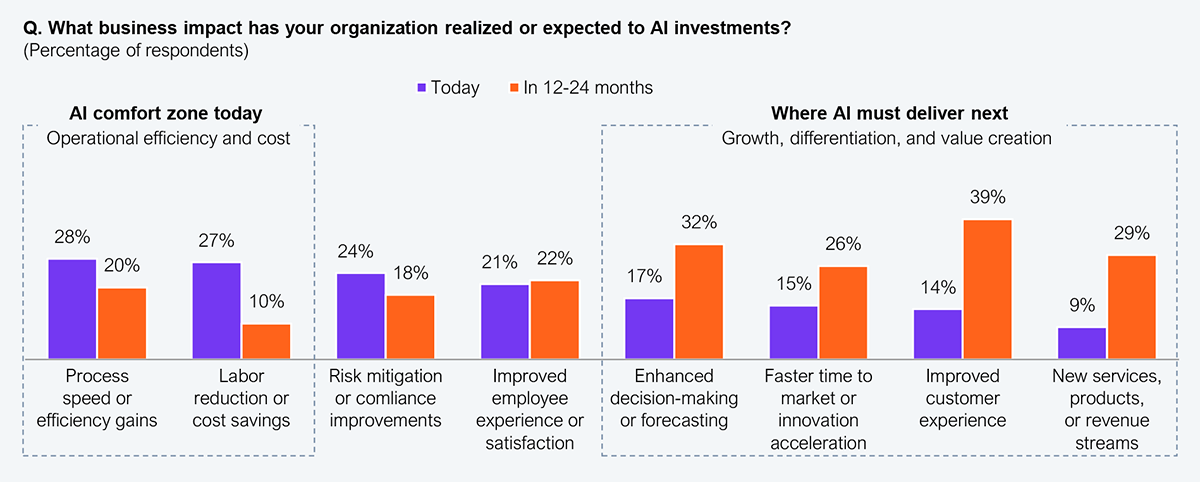

Productivity is increasingly commoditized as an outcome of AI. As shown in Exhibit 2, efficiency and cost improvements, which have long been treated as the primary productivity levers, are now table stakes. The room showed a three-way split on AI outcomes:

1. Some firms are still chasing productivity because it’s an easy win: low-hanging fruit from simple, siloed use cases. But as use cases evolve from basic to complex, those productivity gains flatten.

2. Others are pushing beyond the baseline efficiency wins, a point underscored by the AI leader at U.S. Bank.

AI has clearly started to deliver value beyond productivity. While efficiency use cases still dominate in volume, the real value is now coming from personalization, prediction, and growth-oriented use cases.

— Ashok Panduranga, SVP AI and Automation Lead Executive, U.S. Bank

3. A third camp is playing the longer game, prioritizing adoption first, building enterprise muscle and culture, and letting ROI follow rather than forcing it upfront, as Dennis Gada pressed the group to recognize.

If you democratize AI, build the muscle, and embed it into how people work, the ROI will come. You don’t have to force it in the short term.

— Dennis Gada, EVP Banking & Financial Services, Infosys

Sample: Leaders from 100 Global 2000 financial services firms

Source: HFS Research, 2026

The HFS Take: BFS firms that rewire processes (not blow them up), harden infrastructure, harness models effectively, and fix data at the source are escaping the productivity trap. Without this shift, they risk forfeiting the next rung on the value continuum.

Banks are leaning into agentic AI in 2026, but orchestration ultimately comes down to two factors: control and cost. For many BFS firms, agentic capabilities remain aspirational, constrained by unresolved guardrails, governance, and regulatory comfort. That said, the discussion surfaced real progress, including one delegate deploying sub-agents behind consumer applications supporting tens of millions of daily active users.

Infrastructure is the other gating factor. Heavier reasoning workloads and multi-agent execution are pushing banks toward GPU-centric compute, with AI capital expenditures (capex) up approximately 30% year over year, making GPU access and cost the primary constraints on scaling agentic AI.

The HFS Take: Banks can’t dodge the AI bill. They must plan for cost volatility, assign explicit line items to AI consumption, and treat GPU economics as a first-order scaling decision.
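Planning for cost volatility can start with simple scenario arithmetic. The sketch below is hypothetical: the baseline is an index of 100 rather than a real budget, and the 15% and 45% cases are assumed bounds around the roughly 30% year-over-year increase cited above. Carrying that rate forward into future years is itself an assumption, not a forecast from the roundtable.

```python
from decimal import Decimal


def project_spend(baseline: Decimal, annual_growth: Decimal, years: int) -> list[Decimal]:
    """Compound a baseline spend forward: spend[n] = baseline * (1 + g) ** n."""
    spend = [baseline]
    for _ in range(years):
        spend.append(spend[-1] * (1 + annual_growth))
    return spend


# Hypothetical growth scenarios; only the 30% figure echoes the text above.
SCENARIOS = {
    "low": Decimal("0.15"),
    "base": Decimal("0.30"),
    "high": Decimal("0.45"),
}

BASELINE_INDEX = Decimal("100")  # year-0 AI spend expressed as an index
YEARS = 3

projections = {
    name: project_spend(BASELINE_INDEX, growth, YEARS)
    for name, growth in SCENARIOS.items()
}

for name, path in projections.items():
    print(name, [str(value.quantize(Decimal("0.1"))) for value in path])
```

Under these assumptions, the spread between the low and high cases after three years is about as large as the entire year-0 budget, which is the argument for giving AI consumption its own line item rather than absorbing it into general infrastructure spend.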

In BFS functions where 100% accuracy is nonnegotiable, humans will continue to be in control. But banks cannot afford to wait for “perfect trust” before scaling AI. Even selective scaling demands careful planning across cost, infrastructure, data, models, processes, and controls, with a clear ambition to move up the value continuum over time.

Valuable insight and deep discussion are why industry leaders turn to HFS Roundtables. They want to validate what works, understand what fails, and identify where they need to recalibrate strategies and operating models.
