IBM dropped $11 billion on Confluent on Monday, December 8. It is essentially buying the data streaming layer that it has never been able to build itself. The deal creates a data fabric for CIOs and transformation leaders juggling hybrid cloud, AI roadmaps, and Kafka sprawl. The biggest promise is that you can now connect applications, data, and agents in real time, but not without the risk of getting entangled in Big Blue’s orbit.

The roughly one-third premium on Confluent’s stock price ($31 per share versus just over $23 at the close of trading on Friday) suggests IBM is paying for something much bigger than a topline bump. Confluent comes with battle-tested Kafka infrastructure, hundreds of connectors, and a massive enterprise footprint. That gives IBM instant streaming credibility, something its years of middleware investments never delivered.

For enterprises already deeply invested in IBM’s hybrid cloud and data stack, the question is how far to let IBM own the streaming layer that connects their AI programs. IBM can now offer interoperability across ingestion, streaming, governance, and AI consumption. The promise is a single partner to design, run, and secure the data supply chain that AI depends on.

But…and this is a big but… Confluent’s core appeal has always been its neutrality. Its platform works across AWS, Azure, Google, and on-premises, essentially wherever its clients require. Now Confluent is part of the IBM universe, and the question is whether its Switzerland positioning will survive or slowly morph into “xyz works best with watsonx.” Enterprises ought to be skeptical.

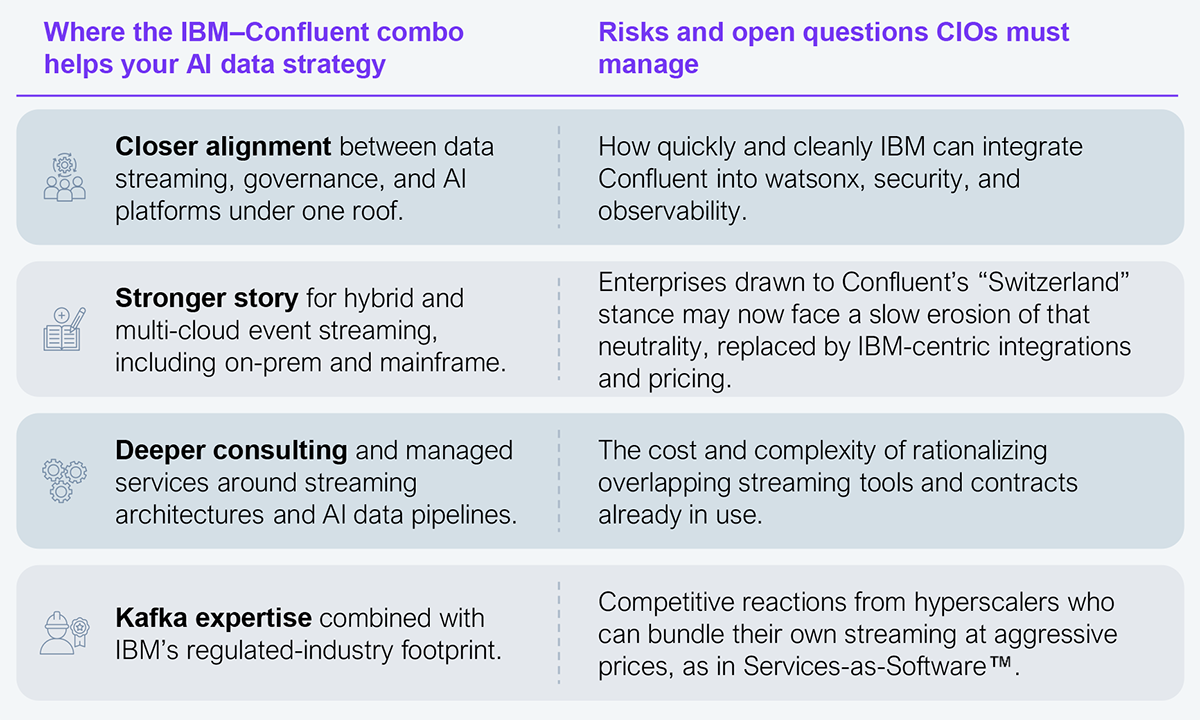

Through an enterprise lens, the trade-off looks like Exhibit 1.

Source: HFS Research, 2025

Real-time streams are becoming the rails for AI agents because batch analytics won’t cut it anymore. Platform consolidation is forcing hard choices about who owns your data plane. And multi-cloud? You’ll need to actively negotiate it, because neutral tools are getting absorbed into platform plays that can reshape pricing overnight.

IBM has been assembling a stack that runs from infrastructure to data workflows to AI output. Red Hat provides a standard fabric for hybrid infrastructure, HashiCorp helps provision and secure that fabric, and Confluent now sits in the middle as the real-time data and events layer that keeps applications and AI agents aligned. The broader industry is converging on the idea that successful AI programs are fundamentally data engineering and integration programs. This move brings IBM closer to that center of gravity.

Use this deal as leverage. Demand clear integration roadmaps and timelines, explicit protection for Confluent’s openness, and transparency on pricing and migration paths. Don’t just blindly accept being locked into one vendor because it seems easier.
